Outraged. Alarmed. We’re failing our kids.

Friday’s news tells a story we should all be outraged about. A 17-year-old from New Jersey is suing the makers of an AI-powered “nudify” tool after a classmate allegedly used the app to turn a swimsuit photo she had posted at age 14 into a fake nude image.

She now reportedly lives in constant fear that the fabricated image will continue to circulate, or that it has already been used to train the very AI system that victimized her.

Let that sink in. A teenager shares an ordinary social-media photo of herself in a swimsuit, and the technology exists to virtually strip her clothes off and circulate the result without her consent.

This is not hypothetical. It is real. It is happening. And it raises urgent questions:

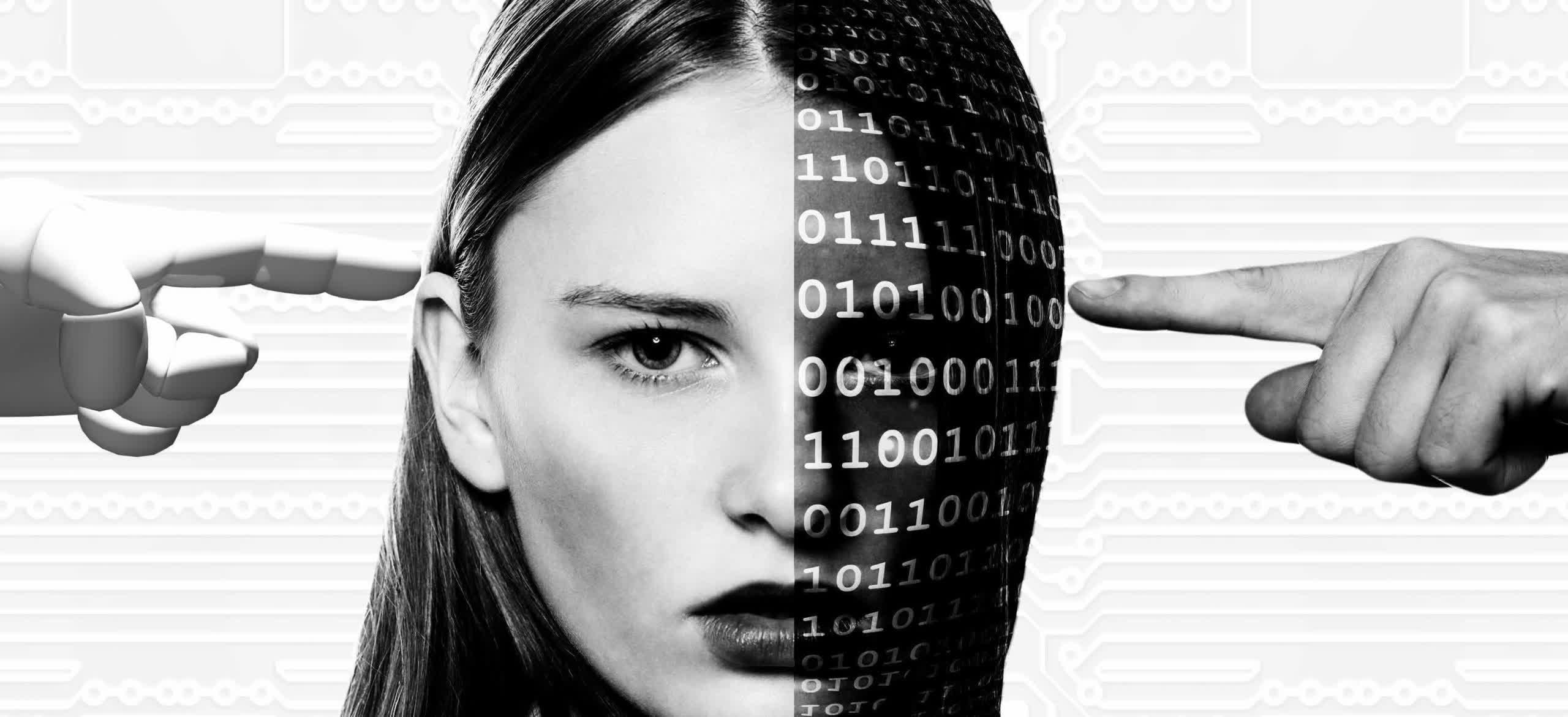

Consent. If people cannot control how their images are used, especially once AI can manipulate them, we are eroding the very concept of consent in the digital age.

Accountability. Who is responsible when tools are designed to facilitate sexualized, non-consensual imagery?

Normalization of harm. These “nudify” tools are not fringe anymore. The technology is available, fast, and increasingly abused. The implications for privacy, dignity, and young people’s mental health are enormous.

Regulation and ethics. We are behind. Technology leaps ahead while laws, protections, and platform controls lag. This is a wake-up call for technology leaders, corporate boards, and policymakers, and for everyday users too.

In our industry, we champion innovation, disruption, change. But we must also honor the human on the other end of the screen. To any educator, parent, technologist, or platform executive: this isn’t someone else’s problem. It’s ours. It’s everyone’s.

If we don’t protect a teenager haunted by a fake nude she never consented to, we have lost the moral right to claim we are building “better tech.”

To the young woman suing: you shouldn’t have to fight this alone. To the platforms and tools facilitating this: shame on you if you continue to enable harm in the name of “fun” or “innovation.”

And to all of us: let’s do better. There is no progress if dignity is collateral damage.